Models of sensory coding

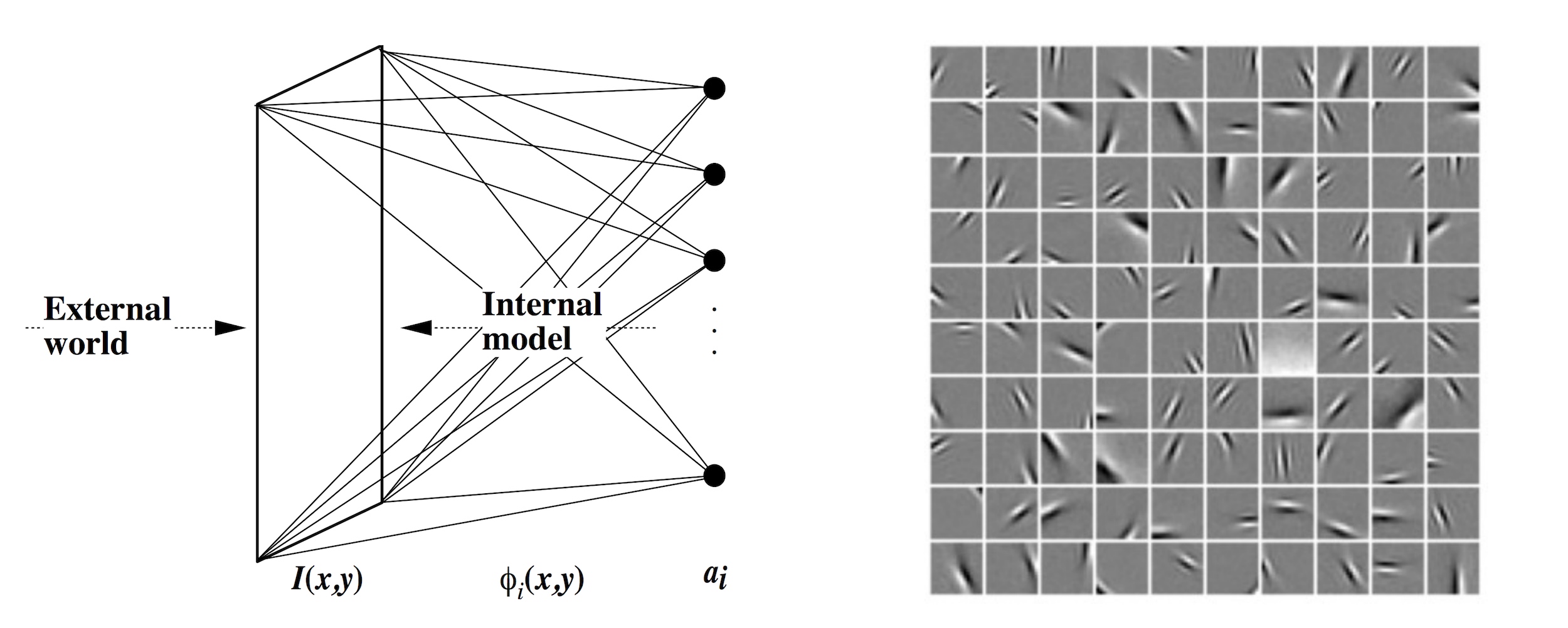

The process of perception requires an animal to integrate sensory information from a variety of organs in order to build a model of the world sufficient for survival. The signals from each of these senses have their own characteristic statistical structure – a core theory in sensory neuroscience is that neurons adapt on a range of timescales to the structure in these signals. We work both on characterizing natural signals and on developing models of sensory neural coding that can learn representations from data. Our efforts have focused primarily on unsupervised learning using principles of sparse coding and information theory. We are particularly interested in the roles of feedback and hierarchy in building representations of sensory data. While some theories of sensory coding are fairly general, the majority of our past and present work in this area has focused on models of the visual and auditory systems.

In addition to modeling neural representation, we are investigating the potential for some of these models to serve as the basis for data compression schemes. We believe that neuroscience and psychophysics have a lot to offer in determining 1) good ways to sample sensory signals and 2) how to compactly represent the relevant information they contain. Given the amount of visual and audio data stored online, we believe that this may become an important application for our work in this area.

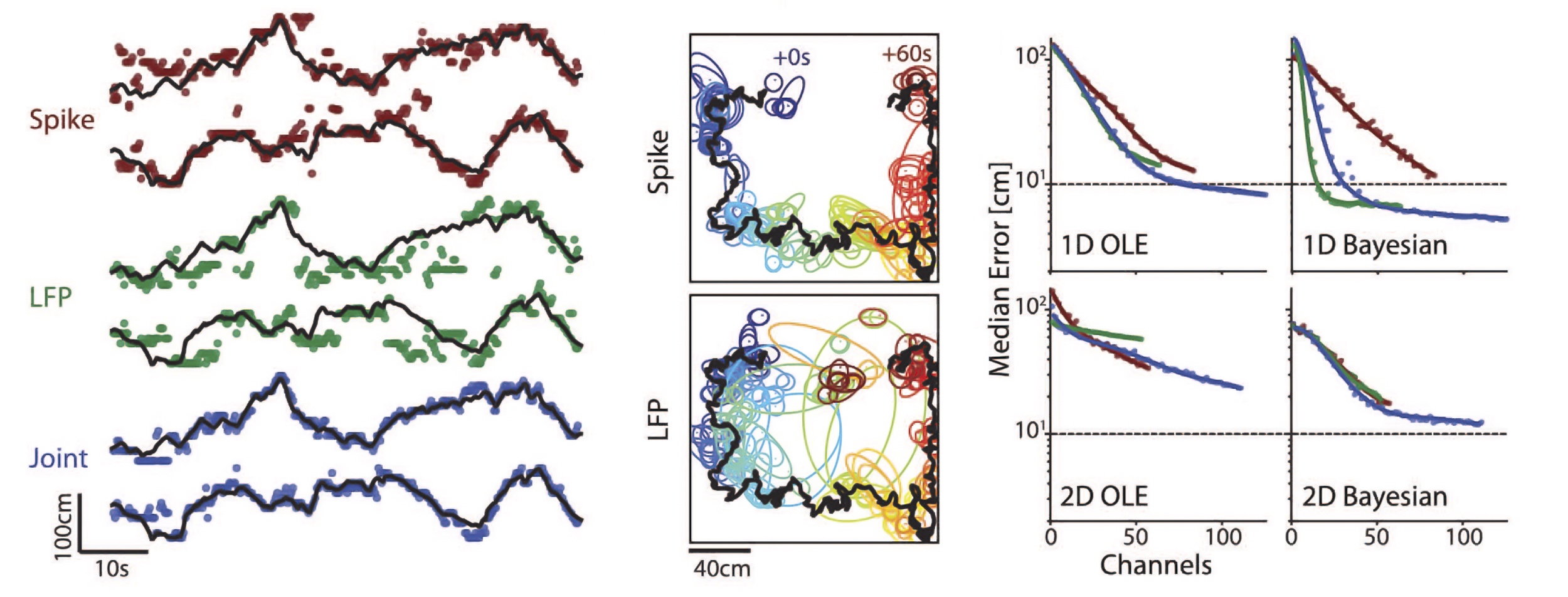

Analysis and modeling of neuroscience data

The quality and variety of neuroscience measurement techniques have dramatically improved over the last few decades, and researchers now have access to huge multivariate neural datasets across a large range of spatial and temporal scales. Statistical methods for drawing meaningful conclusions from these data are still catching up to these innovations, and we are contributing to this effort. Our work is both in developing such methods and in applying them to the analysis of neural data, in particular from the hippocampus and visual cortex. Past work in this area has included, for example, an analysis of bimodal firing behavior in thalamic relay cells, models of higher-order correlations within microcolumns in the primary visual cortex, and the discovery of place fields encoded by local field potential signals. The Redwood Center is also engaged in neuroinformatics and runs the Collaborative Research in Computational Neuroscience (CRCNS) project, which hosts a wide variety of neuroscience datasets along with collaborative tools for sharing these datasets with the neuroscience community.

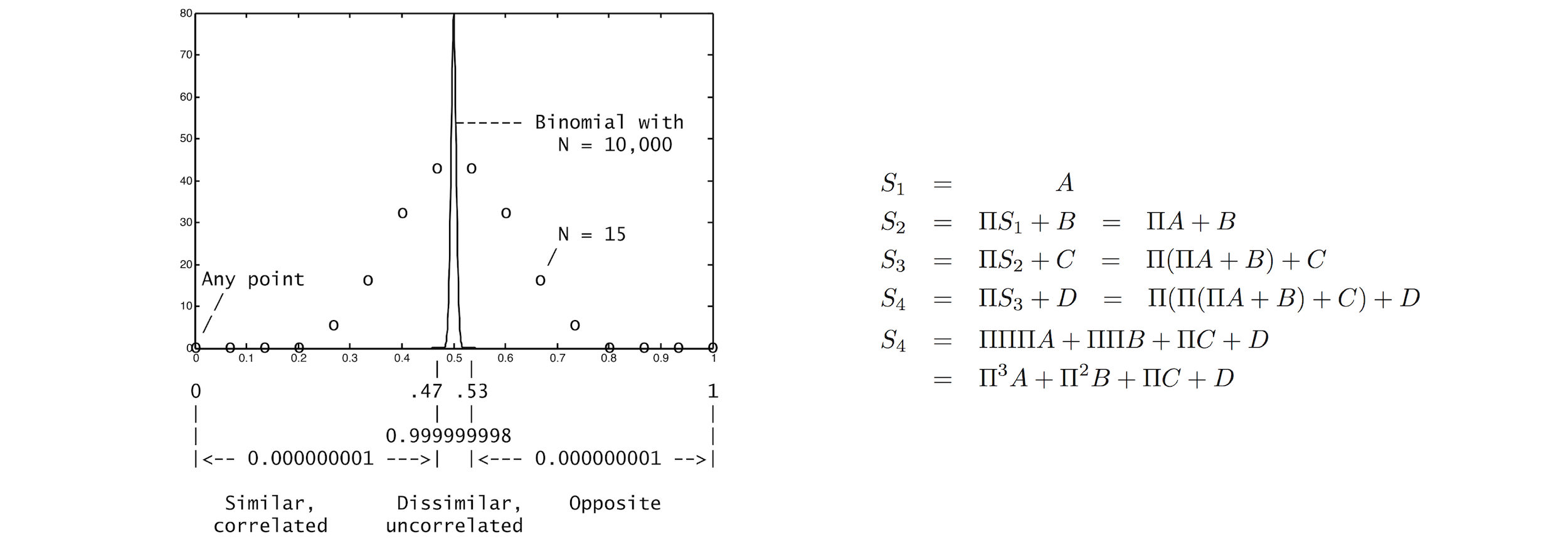

Computing with high-dimensional distributed representations

Several current projects at the Redwood Center are investigating theories of computation that use high-dimensional vectors as the atomic unit of representation. Whereas a modern computing architecture operates on 32- or 64-bit words, our theories rely on words that are 1000 or more bits long. Furthermore, information is distributed evenly across all the bits in a given word – systems that use this kind of representation exhibit behavior that is robust to significant perturbations of the underlying words. Models of vector symbolic algebra define a mathematical formalism for computing with these high-dimensional distributed representations and can be understood as abstractions of certain properties of real neural systems. What interests us about this work from a neuroscience perspective is that it provides an example of how high-dimensional distributed representations like those found in the brain might be able to support robust symbolic computation.
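As a rough illustration of the robustness described above, the following minimal sketch builds a small record out of role and filler vectors and then reads one field back after corrupting the record. It is not drawn from any Redwood Center code: the bipolar (+1/-1) encoding, elementwise multiplication for binding, majority-sign superposition for bundling, the 10,000-component word length, and all function and variable names are assumptions chosen for illustration, and other vector symbolic formalisms make different choices.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM = 10_000  # assumed word length; the text only requires 1000 or more bits


def random_hypervector():
    """Draw a random bipolar (+1/-1) vector; each component plays the role of one bit."""
    return rng.choice(np.array([-1, 1]), size=DIM)


def bind(a, b):
    """Bind two vectors by elementwise multiplication (its own inverse for bipolar vectors)."""
    return a * b


def bundle(*vectors):
    """Superpose an odd number of vectors by taking the sign of their componentwise sum."""
    return np.sign(np.sum(vectors, axis=0))


def similarity(a, b):
    """Normalized dot product; equals cosine similarity for bipolar vectors."""
    return float(a @ b) / DIM


def flip_fraction(v, fraction):
    """Return a copy of v with a random fraction of its components sign-flipped."""
    noisy = v.copy()
    idx = rng.choice(DIM, size=int(fraction * DIM), replace=False)
    noisy[idx] *= -1
    return noisy


def cleanup(query, codebook):
    """Return the name of the codebook entry most similar to the query."""
    return max(codebook, key=lambda name: similarity(query, codebook[name]))


# Hypothetical roles and fillers for a three-field record.
roles = {name: random_hypervector() for name in ("color", "shape", "size")}
fillers = {name: random_hypervector() for name in ("red", "circle", "large")}

# The whole record is a single word; every field is spread across all components.
record = bundle(
    bind(roles["color"], fillers["red"]),
    bind(roles["shape"], fillers["circle"]),
    bind(roles["size"], fillers["large"]),
)

# Corrupt 20% of the components, then query the "color" field.
noisy_record = flip_fraction(record, 0.2)
decoded = cleanup(bind(noisy_record, roles["color"]), fillers)
print(decoded)
```

Under these assumptions the query should still recover `red`, because unbinding the corrupted record leaves a vector that remains far more similar to the correct filler than to the unrelated ones, whose similarity stays near zero at this dimensionality.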

This research also demonstrates a new paradigm for computing that may address challenges posed by the end of Moore’s Law. Our collaborations with academic and industrial groups developing next-generation computing architectures have centered on building efficient implementations of these models, and early results demonstrate significant advantages over existing approaches. Physical systems that implement these models enjoy improvements in performance, energy efficiency, and robustness with simple algorithms that learn fast. We think this may be a path towards building the next generation of computing hardware.

Understanding neural networks

One long-standing research direction within theoretical neuroscience is the study of networks of artificial neurons that capture, at some level, the properties of neurons measured in real brains. This allows us to investigate both what kinds of tasks these networks are good at performing and what might be missing from our current models. Over the past decade there has been growing recognition of the capabilities of neural network models as general computational objects that can learn to represent and store patterns found in data from a wide variety of natural signals. While the applications of these models are compelling, we are far more interested in why these systems behave the way they do. We are attacking this question using ideas from random matrix theory, dynamical systems theory, and statistical mechanics.

Mainstream use of neural networks outside the field of neuroscience is based on a model of neurons that is now more than sixty years old. Neurons in real brains are capable of a much richer set of computations than these older models utilize. One of our objectives is to demonstrate the utility of using neural network models that incorporate a more modern view of neural computation.

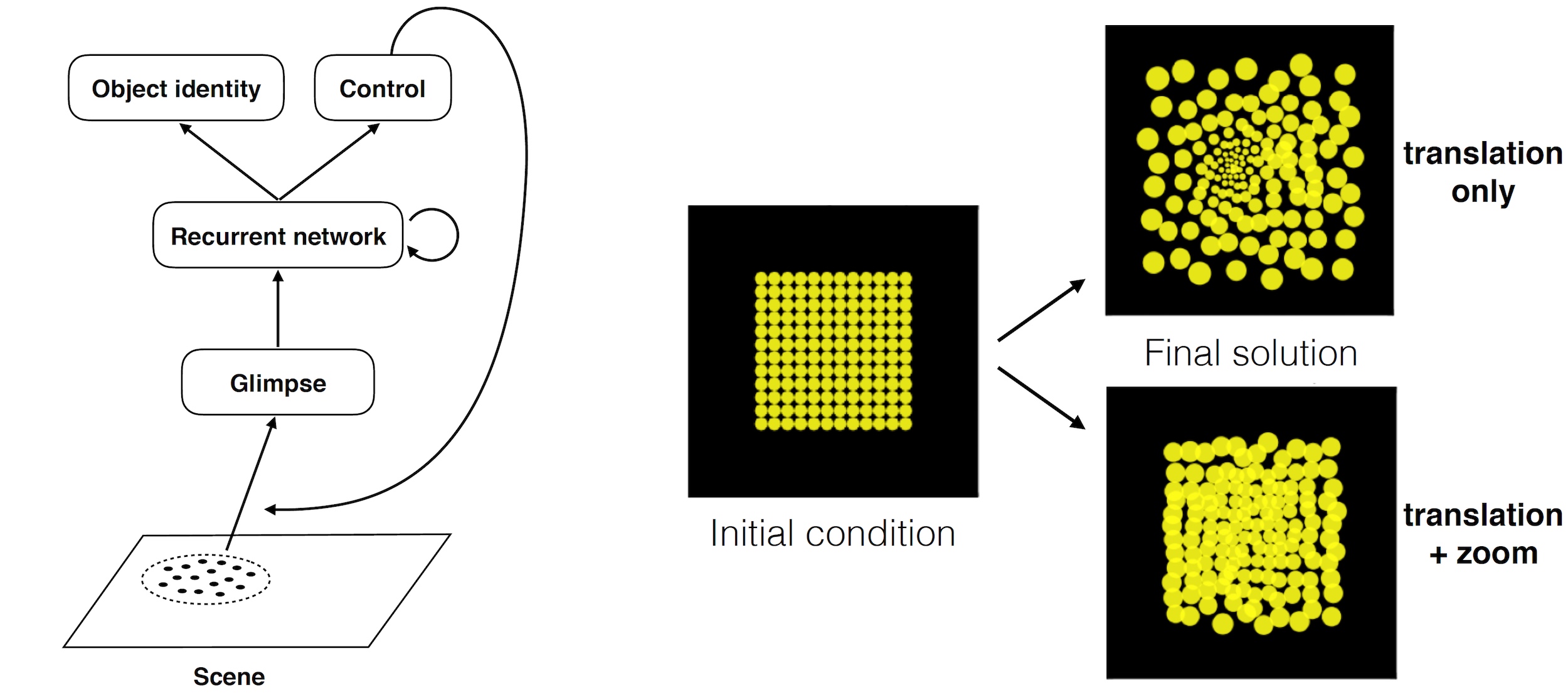

Active perception

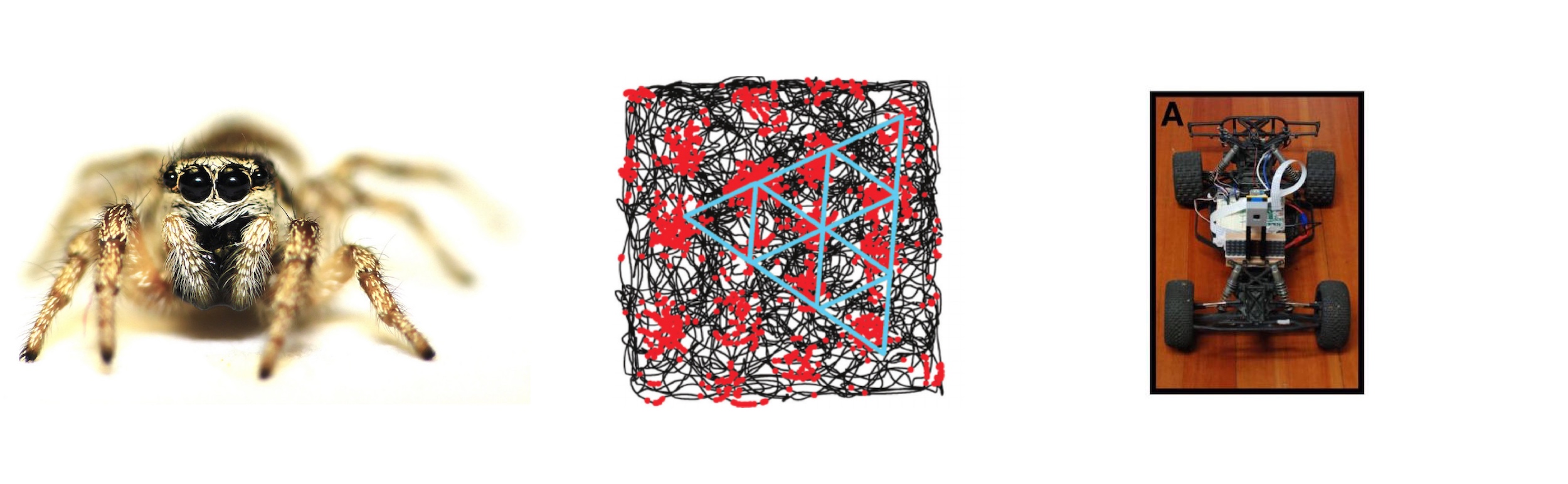

Much of the past work on perception has carried with it the implicit assumption of a passive observer collecting sensory information from the world. This reductionist approach, while valuable, leaves out a key function of biological visual systems that may be critical to understanding them as a whole – i.e., the purposeful and active acquisition of information about the world through eye, head, and body movements.

As these movements occur, the information acquired from different fixations must somehow be assimilated and stored in working memory in order to enable actions that go beyond simple reflexes based on the immediate input. We are studying this problem along several different lines:

- Theories of attention that address where one should look or move

- Theories of optimal signal acquisition given constraints imposed by active perception

- Theories of working memory and representation that can build up a stable percept of the world from multiple fixations over time

In all of this work, our objective is to develop a neural architecture that functions in a robust and biologically relevant manner.

Autonomous systems

Despite decades of research on this topic within the robotics and computer science communities, humans and other animals remain the only systems in existence capable of truly autonomous behavior. We believe that sensing, navigating, remembering, and acting in a rich three-dimensional environment is fundamentally more difficult than many researchers first realized, and that a key component of deconstructing this problem is the study of nervous systems that have already solved it. Work from the neuroscience community that has something to offer here includes theories of sensorimotor loops, feedback, memory, and neural encodings of position and orientation in three-dimensional space. Our objective is to combine some of these theories with ideas from engineering, in particular Simultaneous Localization and Mapping (SLAM), to design systems that are robust, efficient, and general-purpose.